AI That Knows Its Boundaries: Security-Aware Agents

The Challenge: AI That Answers What It Shouldn’t

Many AI tools can access your data—but can they enforce who’s allowed to see what?

- Most models treat all users equally, ignoring access control.

- Sensitive data (like compensation or HR policies) risks being overexposed.

- Without proper enforcement, trust in the agent—and in your data—breaks down.

What if your AI assistant respected user permissions and enforced row-level security out of the box?

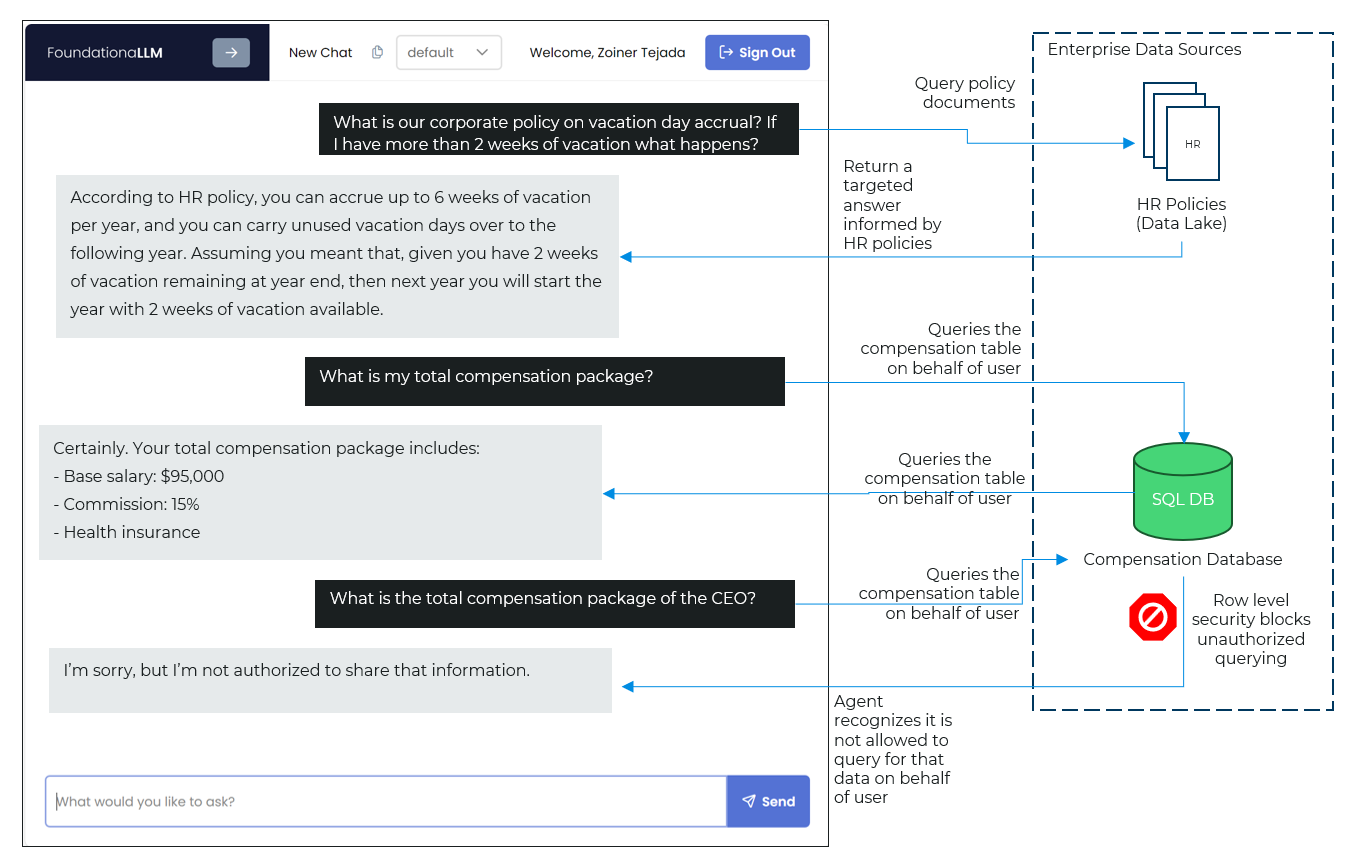

The Solution: FoundationaLLM Enforces Enterprise Permissions

FoundationaLLM is a platform, not a SaaS product. It runs entirely within your environment and allows you to build LLM-agnostic, policy-aware agents that integrate directly with your enterprise systems. These agents can respect row-level security, interpret access control logic, and respond appropriately based on the user's identity—across both structured and unstructured data. Whether you're using GPT-4, Claude, or open-source models, FoundationaLLM ensures your agents operate within your security and governance frameworks.

Because FoundationaLLM builds on the security controls you already have, users see only what they’re authorized to see: nothing more, nothing less. That means faster deployment, fewer custom integrations, and lower cost than building proprietary permissioning logic internally, all while reducing compliance risk and increasing trust in enterprise AI systems.

How It Works

Understands User Context – Every query is scoped to the user’s role and identity.

Queries Documents and Databases – Pulls policy docs, compensation data, and more—only if authorized.

Evaluates Row-Level Security (RLS) – If access is denied, the agent recognizes it and doesn’t guess.

Responds Responsibly – The agent explains why it can’t answer, instead of returning risky or incorrect information.
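
The four steps above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not FoundationaLLM's API: `UserContext`, `visible_rows`, and `answer_salary_question` are hypothetical names, the data is invented, and the in-memory filter stands in for the row-level security policy that a real database would enforce.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class UserContext:
    """Hypothetical identity attached to every query (step 1)."""
    user_id: str
    role: str


# Invented sample rows; in practice these live in a database with RLS enabled.
COMPENSATION = [
    {"employee_id": "alice", "manager_id": "carol", "salary": 120_000},
    {"employee_id": "bob", "manager_id": "carol", "salary": 95_000},
]


def visible_rows(user, rows):
    """Stand-in for a database row-level security predicate (step 3)."""
    if user.role == "hr_admin":
        return list(rows)
    # Everyone else sees only their own row or rows of their direct reports.
    return [r for r in rows if user.user_id in (r["employee_id"], r["manager_id"])]


def answer_salary_question(user, employee_id):
    """Query under the user's identity (step 2) and respond responsibly (step 4)."""
    rows = [r for r in visible_rows(user, COMPENSATION)
            if r["employee_id"] == employee_id]
    if not rows:
        # No visible rows: explain the limitation instead of guessing a value.
        return ("I can't answer that: no compensation records are visible "
                "to your account for this employee.")
    return f"{employee_id}'s salary is {rows[0]['salary']}."
```

Here an employee asking about a colleague gets the explanatory refusal, while the colleague's manager gets the figure. The filtering happens below the agent, so the model never sees rows the user is not entitled to.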

The Technical Hurdles and How We Solve Them

Hurdle: LLMs don’t inherently know enterprise permissions.

Solution: FoundationaLLM leverages your SQL database’s native row-level security and policy layers.

Hurdle: Most systems don’t detect when a query is blocked.

Solution: Our agents treat an empty result from a secured source as a likely permissions restriction and say so, rather than guessing at an answer.

Hurdle: Secure, multi-source data access is hard to govern.

Solution: FoundationaLLM respects permissions across both structured (databases) and unstructured (docs) data sources.
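
For unstructured sources, the same principle can be illustrated by filtering documents on the caller's group membership before any retrieval runs. The sketch below is an assumption-laden example, not FoundationaLLM's implementation: `Document`, `retrieve`, the group names, and the sample documents are all hypothetical, and the keyword match stands in for whatever search or vector retrieval a real deployment would use.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Document:
    """Hypothetical document record carrying its own access list."""
    title: str
    text: str
    allowed_groups: frozenset


# Invented sample corpus for illustration only.
DOCS = [
    Document("Employee Handbook",
             "Vacation policy and general compensation bands overview.",
             frozenset({"all_employees"})),
    Document("Executive Compensation Plan",
             "Executive compensation plan details.",
             frozenset({"hr_admins", "executives"})),
]


def retrieve(query, user_groups, docs):
    """Return matching documents the user is permitted to read."""
    # Permission filter runs first, so unauthorized text never reaches the model.
    permitted = [d for d in docs if d.allowed_groups & user_groups]
    terms = query.lower().split()
    # Naive keyword match as a placeholder for real retrieval.
    return [d for d in permitted if any(t in d.text.lower() for t in terms)]
```

Applying the permission check before retrieval, rather than after generation, means the agent cannot leak a restricted document through a summary or paraphrase.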

The Business Impact: Trustworthy AI, Compliant by Design

Prevent Data Leaks – Never share compensation, HR, or financial data with the wrong person—reducing the risk of regulatory exposure and internal data breaches.

Build Organizational Trust – Employees and leaders know the agent only returns what’s permitted—improving adoption and confidence across the business.

Support Governance and Compliance – Enforce your data policies naturally, without custom integrations—accelerating deployment and lowering engineering burden.

Confident Self-Service – Users get the answers they need, and nothing they shouldn’t, enabling faster decision-making while keeping sensitive data confidential.

Why FoundationaLLM?

Integrated with row-level security for SQL-based systems

Understands when not to answer

Respects enterprise access control and policy layers

Works across documents, data lakes, and databases

Fully deployed in your Azure environment

Ready to Build Trust into Every Answer?

Let FoundationaLLM enforce your access controls, protect sensitive data, and respond responsibly—without custom rules or manual oversight.

Empower your teams with AI they can trust—and governance your business can stand behind.

Get in Touch